Surveys are critical business tools for gathering customer feedback. This feedback can yield actionable insights and drive decision-making. However, the value of survey responses hinges on their reliability and accuracy.

This article explores the different types of survey response bias and outlines effective strategies to address and mitigate its impact. Applying them will help ensure the accuracy, reliability, and validity of your survey results, so you can get the most out of your research and make well-informed, customer-focused decisions.

What is survey response bias?

Response bias is the tendency of respondents to give inaccurate, misleading, or dishonest answers to self-report questions. It undermines the validity of your research and, therefore, the accuracy of any data analysis your business bases on it.

Bias can stem from several factors, including the tone, phrasing, and order of survey questions; respondents' desire to answer as they think they ‘should’, whether to fit what they perceive as the research project's aim or to appear socially desirable; or simply a lack of interest.

Response bias typically arises in questionnaires that focus on individual opinions or behaviors. Given the huge influence public perception has on our lives, survey participants tend to respond in a way that will allow them to be perceived positively.

Types of survey response bias

While ‘response bias’ acts as an umbrella term for all inaccurate survey responses, there are many different individual types. Each one could skew or even invalidate your survey data. Here’s a more detailed exploration of the individual types of response bias that you should look out for:

1. Sampling bias

Sampling bias occurs when a difference exists between those who responded to your survey and those who didn’t. This can happen when certain people from your chosen population are more likely to complete the survey than others. If those who responded all share a common characteristic, your sample won’t represent a random selection of your customer base, and your results will be inaccurate from the outset.

For example, if you shared your survey only on social media, your sample might skew toward a younger demographic and toward customers who are more engaged with your brand than your overall target population. This would produce an inaccurate and unreliable picture of overall customer sentiment.

2. Acquiescence bias

This describes respondents' tendency to agree with statements or choose positive response options disproportionately often, regardless of their own opinions or what the question actually asks.

For example, participants might respond favorably to questions in your product survey involving new ideas because they think that’s what researchers want to hear.

This bias can also surface when you ask respondents to confirm a statement in the form of a ‘leading question’, or when you only supply binary answer options, such as True/False, Agree/Disagree, or Yes/No. A question of this kind might read: Would you say that our new product rollout was successful? Yes/No.

An acquiescence bias example

3. Demand bias/characteristics

This occurs when participants respond based on what they believe the research agenda is rather than on their own behaviors and opinions. As with acquiescence bias, participants may pick up on researchers’ aims and expectations through clues like your brand name, the survey title, the phrasing of your questions and answers, and how researchers interact with them.

Let’s say you’re an online fashion retailer looking to launch a mobile app. You put your brand logo on a survey titled Mobile app and ask customers questions like, How often do you shop online from your phone? (Every day / A few times a week / Rarely / Never).

From the example above, participants can easily figure out the study’s purpose, and this may bias their responses and render findings meaningless.

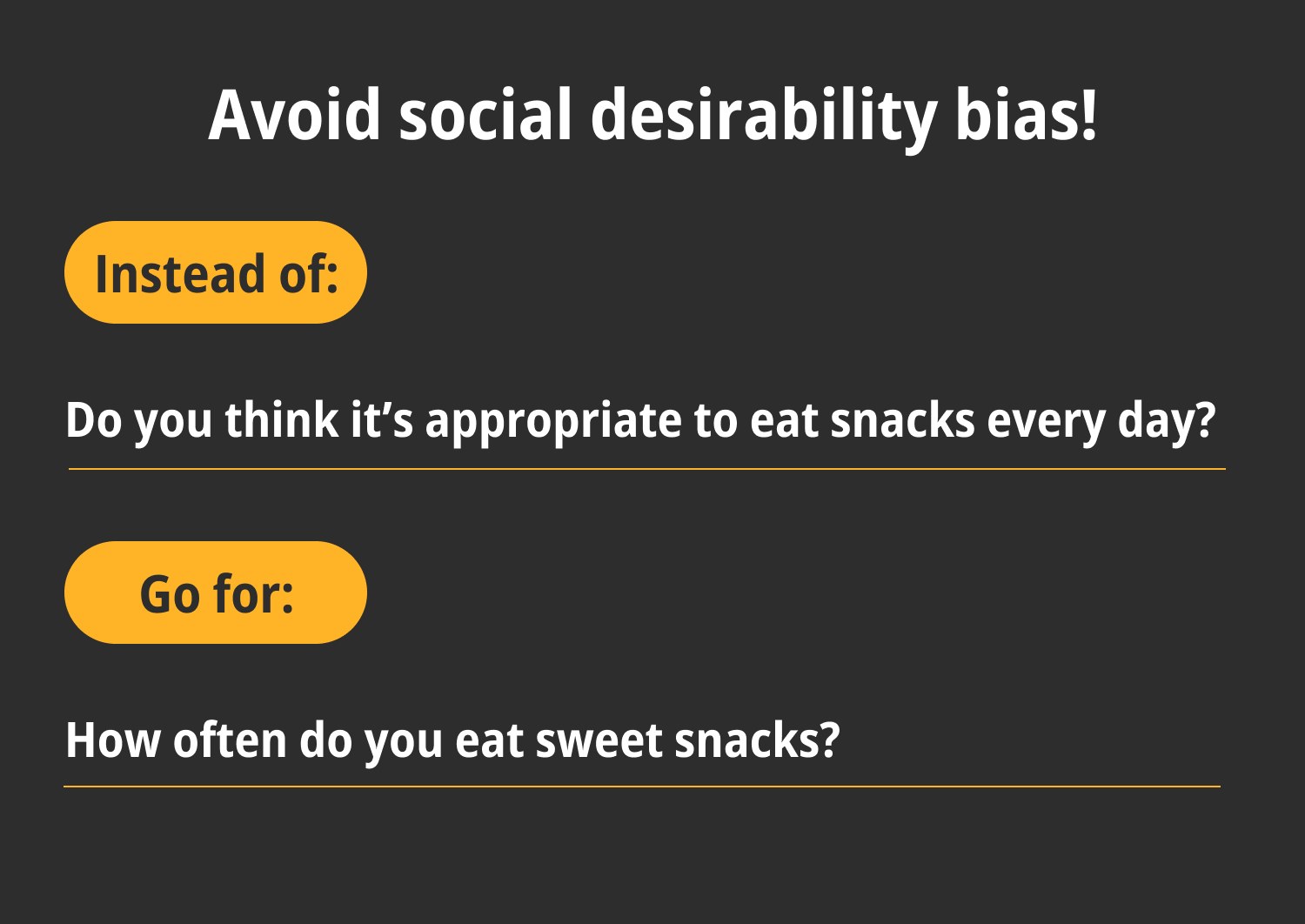

4. Social desirability bias

This refers to respondents’ tendency to adjust their answers to align with social norms and expectations. Subjects prefer to report ‘good behavior’ and suppress ‘bad behavior.’ Poorly worded, leading questions or personal/sensitive topics, such as diet, income, politics, religion, and health, often trigger socially desirable answers.

Let’s say you’re a food business looking to test demand for a sugar-free brownie. If you ask respondents a question like, Do you think it’s appropriate to eat sugary snacks every day? those whose honest answer is yes may feel inclined to lie, fearing that they will be perceived negatively by others or by the researcher.

A social desirability bias example

5. Question-order bias

This is when the context of earlier questions shapes participants’ responses to later ones. The first question may influence respondents’ opinions on a topic and lead them to answer subsequent questions in a way that stays consistent with their earlier answers rather than reflecting their true views.

Let’s say question one asks, Would you rank flavor as the most important factor in selecting a sweet snack? followed by, Would you consider purchasing a healthier alternative to your usual sweet snack?

If someone answers positively to the first question, they may now feel more reluctant to respond positively to the second. However, if asked the second question first, they might have viewed the healthy option more neutrally or even favorably.

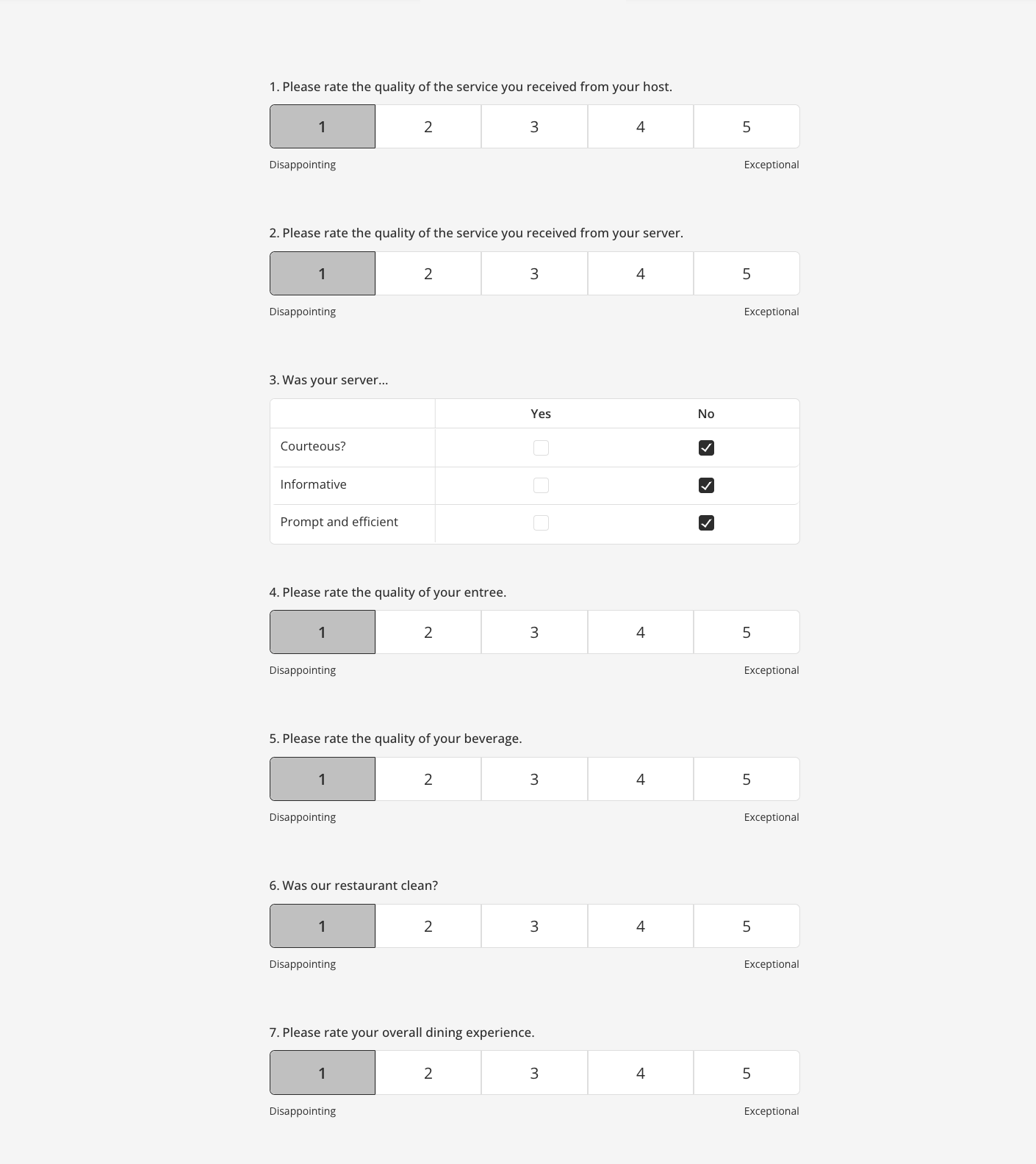

6. Extreme response bias

This refers to participants marking all their responses with the same answer, usually an extreme one, even if it doesn’t reflect their opinions on a topic. It often occurs when respondents get bored because a survey is too long.

If respondents simply select Strongly agree as their answers to a variety of questions like, How would you rate the quality of our service? Do you find our social media content engaging? Do you find it easy to make bookings on our website? it’s likely that they’re trying to get through the survey as quickly as possible.

An extreme response bias example
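Screening collected responses can help surface this pattern. The Python sketch below is one rough, hypothetical check rather than a standard method: it assumes each respondent's answers are stored as integers on a 1-5 agreement scale and flags anyone who gives the same answer to every item or whose answers sit almost entirely at the scale's endpoints. The function name and the 90% threshold are illustrative assumptions.

```python
from typing import Sequence


def flag_extreme_responder(answers: Sequence[int], scale_min: int = 1,
                           scale_max: int = 5, endpoint_share: float = 0.9) -> bool:
    """Flag a respondent who straight-lines or mostly picks scale endpoints.

    Assumes `answers` holds one respondent's ratings on a scale_min..scale_max scale.
    The 0.9 threshold is an illustrative assumption, not a standard cutoff.
    """
    if not answers:
        return False
    # Same answer to every question ("straight-lining").
    if len(set(answers)) == 1:
        return True
    # Share of answers at either end of the scale.
    extremes = sum(1 for a in answers if a in (scale_min, scale_max))
    return extremes / len(answers) >= endpoint_share


# Hypothetical respondents: 1 = Strongly disagree ... 5 = Strongly agree.
respondents = {
    "r1": [5, 5, 5, 5, 5],  # "Strongly agree" to everything
    "r2": [4, 2, 5, 3, 4],  # varied answers
    "r3": [1, 5, 5, 1, 5],  # only endpoints, but not identical
}
flagged = [rid for rid, ans in respondents.items() if flag_extreme_responder(ans)]
print(flagged)  # ['r1', 'r3']
```

Treat flagged respondents as candidates for review rather than automatic exclusions, since some people genuinely hold strong views.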

Strategies to address survey response bias

Now that we’ve covered the most common and detrimental types of survey bias and how these can skew your data, invalidate your research efforts, and waste resources, let’s examine the strategies you can use in building your survey to reduce the occurrence of bias.

1. Careful questionnaire design

Many of the types of bias we’ve examined are caused by poor survey design. Therefore, you must craft your questionnaire meticulously and focus on writing good survey questions to minimize bias and ensure validity.

This involves using neutral language, avoiding leading or complex questions, and creating clear, easily understood prompts.

Respondents can’t give accurate answers if they’re not sure what you’re asking. Pose your questions using simple language, short words, and common phrases to minimize the chance of participants misunderstanding them.

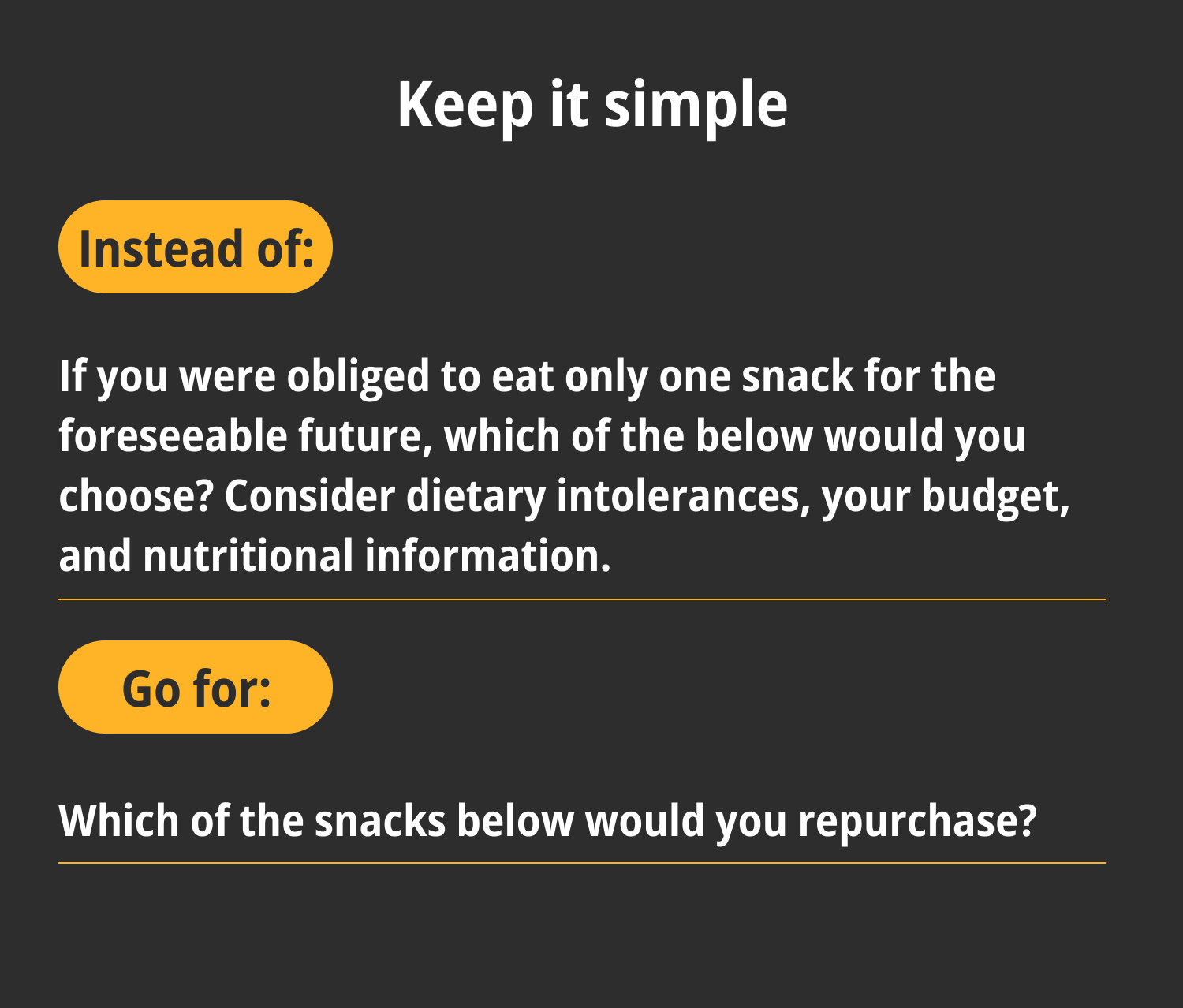

Keep the questions simple

If the topic you’re researching requires specialized knowledge or context, including a short explanation along with the survey will help keep your participants on board.

Stick to a couple of short paragraphs at most, though. Presenting respondents with pages of information might drive them to select answers carelessly just to finish the survey quickly.

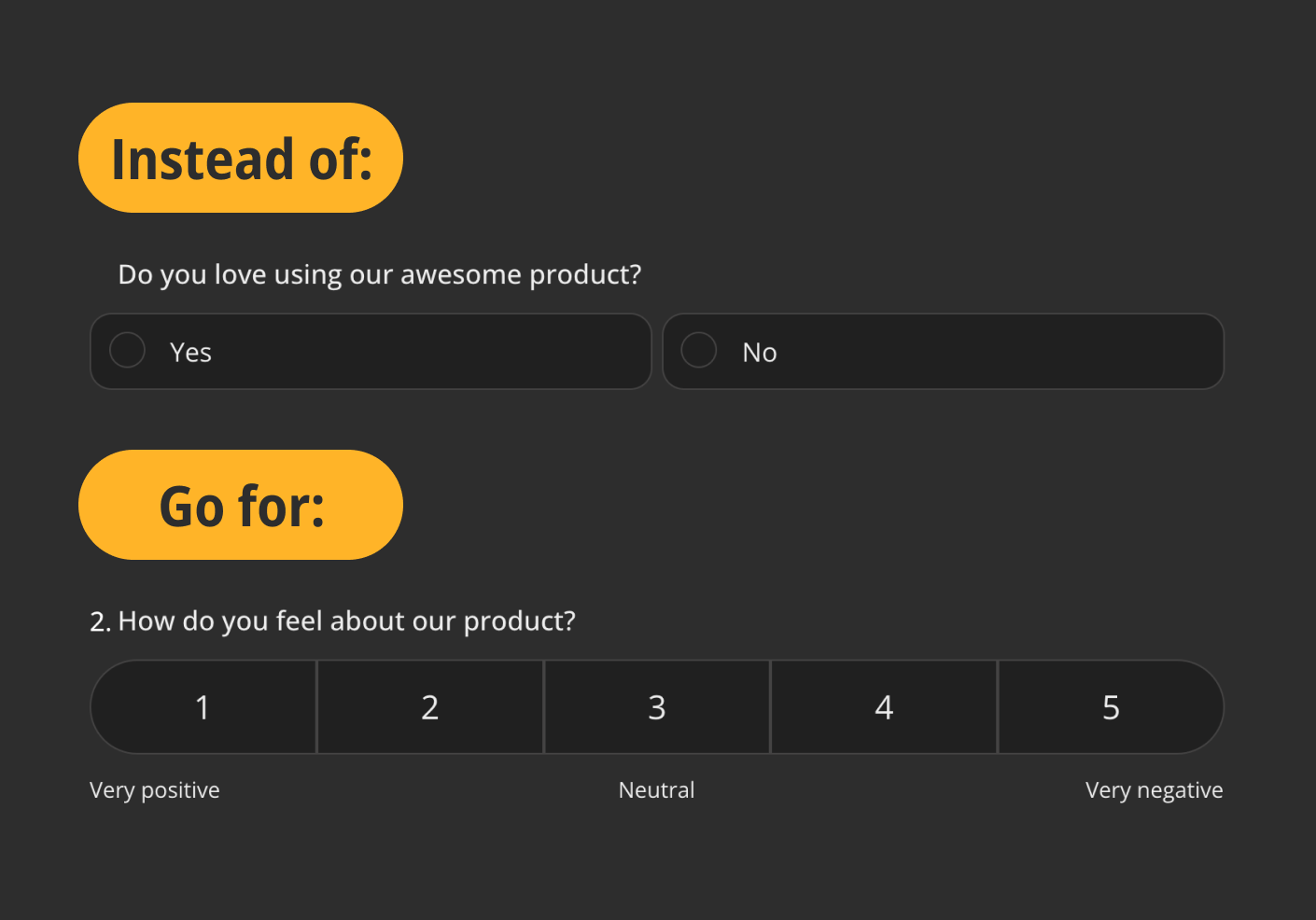

You must also avoid presenting participants with leading questions. To do so, ensure that you structure your questions openly and word them neutrally, avoiding the use of unnecessary adjectives.

A question like, How do you feel about our product? alongside a range of answer options, allows for more freedom of response than, Do you love using our awesome product? Yes/No.

Avoid leading questions

2. Randomized questions and response options

To mitigate order bias, you should vary the order of questions and answers. This will ensure that responses aren’t influenced by the sequence in which questions or options are presented.

To aid in this process, online survey makers like forms.app offer features such as an option to shuffle answer choices. Randomizing the order of potential answers is an easy way to reduce order bias.
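Per-respondent randomization can be sketched in a few lines of Python. This is a hypothetical illustration, not how forms.app implements its shuffle feature: the questions and the `shuffle_options` flag are assumptions. It shuffles the question order and the options of unordered (nominal) questions, while leaving ordered rating scales intact, since shuffling a scale that runs from Very dissatisfied to Very satisfied would only confuse respondents.

```python
import random

# Hypothetical survey definition.
QUESTIONS = [
    {
        "text": "Which features do you use most?",
        "options": ["Search", "Wishlist", "Checkout", "Reviews"],
        "shuffle_options": True,   # nominal options: order carries no meaning
    },
    {
        "text": "How satisfied are you with our service?",
        "options": ["Very dissatisfied", "Dissatisfied", "Neutral", "Satisfied", "Very satisfied"],
        "shuffle_options": False,  # ordered scale: keep its natural order
    },
    {
        "text": "How did you hear about us?",
        "options": ["Friend", "Social media", "Search engine", "Advertisement"],
        "shuffle_options": True,
    },
]


def build_survey(respondent_id):
    """Return a per-respondent copy of QUESTIONS with randomized order."""
    rng = random.Random(respondent_id)  # seeded: the same ID always gets the same order
    survey = [{**q, "options": list(q["options"])} for q in QUESTIONS]  # copy, don't mutate
    rng.shuffle(survey)  # randomize question order
    for q in survey:
        if q["shuffle_options"]:
            rng.shuffle(q["options"])  # randomize answer-option order
    return survey


for question in build_survey("respondent-42"):
    print(question["text"], question["options"])
```

Seeding the generator with the respondent ID is a design choice that lets you reconstruct exactly which order each person saw, which is useful when checking whether order effects remain in the data.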

3. Inclusion of all potential respondents

To avoid sampling bias and gather data that provides an accurate reflection of the population you’re studying, you must ensure that every individual within your target demographic has an equal chance of participating in your survey.
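In practice, this usually means drawing a simple random sample from a complete list of your target population, so every individual has the same probability of being invited. A minimal Python sketch, assuming a hypothetical list of customer IDs; note that equal chances of being invited do not guarantee equal response rates, which is why reaching people through several channels still matters.

```python
import random
from typing import List, Optional


def draw_simple_random_sample(population: List[str], n: int,
                              seed: Optional[int] = None) -> List[str]:
    """Pick n distinct members; every member has the same chance of selection."""
    if not 0 <= n <= len(population):
        raise ValueError("sample size must be between 0 and the population size")
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(population, n)


# Hypothetical sampling frame: every customer in the target population.
customers = [f"cust-{i:04d}" for i in range(1, 5001)]
invitees = draw_simple_random_sample(customers, 400, seed=7)
print(len(invitees), len(set(invitees)))  # 400 400
```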

Sharing your survey over a wide range of distribution channels is critical. Posting it on social media is not your only option: you can also share it via a direct link or email, embed it on your website, or send out paper copies.

If you’re willing to invest in a CCaaS (Contact Center as a Service) platform to act as a hub for customer interactions, you can also reach participants by phone, including those who don’t engage with newer technology.

4. Adding control questions

An effective way for surveyors to identify respondents affected by extreme response bias is to add control questions to the questionnaire. These can be as simple as an instruction like, Choose option 5 if you’re reading this statement. Another common method is to ask the same question twice, paraphrased.

For example, you might ask respondents, How satisfied are you with our services? early in the survey and, later, On a scale of 1-10, how would you rate your satisfaction with our services?
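Checks like these are straightforward to automate once responses are exported. The Python sketch below assumes the first satisfaction question uses a 1-5 scale (the example above doesn't specify one) and the paraphrased version a 1-10 scale, and rescales both to 0-1 before comparing them. The field names and the 0.3 tolerance are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Response:
    respondent_id: str
    attention_check: int  # answer to "Choose option 5 if you're reading this statement."
    satisfaction_5: int   # "How satisfied are you with our services?" (assumed 1-5 scale)
    satisfaction_10: int  # "On a scale of 1-10, how would you rate your satisfaction...?"


def to_unit(value: int, low: int, high: int) -> float:
    """Map a rating onto 0..1 so scales of different lengths can be compared."""
    return (value - low) / (high - low)


def fails_controls(r: Response, tolerance: float = 0.3) -> list:
    """Return the names of the control checks this response fails."""
    failures = []
    if r.attention_check != 5:
        failures.append("attention_check")
    gap = abs(to_unit(r.satisfaction_5, 1, 5) - to_unit(r.satisfaction_10, 1, 10))
    if gap > tolerance:
        failures.append("inconsistent_satisfaction")
    return failures


responses = [
    Response("r1", 5, 4, 8),  # passes both checks
    Response("r2", 3, 5, 9),  # misses the attention check
    Response("r3", 5, 5, 2),  # contradicts itself on satisfaction
]
for r in responses:
    print(r.respondent_id, fails_controls(r))
```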

To enhance survey analysis and mitigate bias, you can also consider tools like artificial intelligence. AI can offer valuable insights into the customer experience by analyzing survey responses for patterns and trends, helping businesses make more informed decisions that boost overall satisfaction and loyalty.

How to mitigate existing survey response bias

The task of minimizing the effects of survey response bias doesn’t stop when your data has been collected. Post-survey adjustments and statistical techniques can help further mitigate the impact of bias even after the survey has been conducted.

Implementing the techniques below will bring you closer to reliable survey results.

1. Post-survey adjustments

These are methods used to correct potential bias in results that might have occurred during data collection. Such techniques include data weighting and imputation. Data weighting adjusts survey results to represent the overall population more accurately.

Researchers assign each respondent a weight based on demographic characteristics such as age, location, and gender, so that each group counts in proportion to its share of the population. This accounts for differences between those who choose to participate in the research and those who don’t. Imputation involves replacing missing data with substituted values to obtain a complete dataset and, therefore, reduce the likelihood of bias.
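A toy example helps show how weighting and imputation fit together. The Python sketch below uses made-up data and assumed population shares. It fills missing scores with the mean of the respondent's age group (simple group-mean imputation, one of the most basic approaches) and then applies post-stratification weights, where each respondent's weight is their group's population share divided by that group's share of the sample.

```python
from collections import Counter

# Hypothetical responses: age group plus a 1-10 satisfaction score (None = skipped).
respondents = [
    {"age": "18-34", "score": 9},
    {"age": "18-34", "score": 8},
    {"age": "18-34", "score": None},
    {"age": "18-34", "score": 10},
    {"age": "35+", "score": 5},
]
# Assumed population shares, e.g. taken from your customer database.
population_share = {"18-34": 0.4, "35+": 0.6}


def impute_group_mean(rows, group_key, value_key):
    """Replace missing values with the mean of observed values in the same group."""
    observed = {}
    for row in rows:
        if row[value_key] is not None:
            observed.setdefault(row[group_key], []).append(row[value_key])
    means = {group: sum(vals) / len(vals) for group, vals in observed.items()}
    # Raises KeyError if a group has no observed values at all.
    return [
        {**row, value_key: means[row[group_key]]} if row[value_key] is None else dict(row)
        for row in rows
    ]


def poststratification_weights(rows, group_key, shares):
    """Weight each row by (population share of its group) / (sample share of its group)."""
    counts = Counter(row[group_key] for row in rows)
    n = len(rows)
    return [shares[row[group_key]] / (counts[row[group_key]] / n) for row in rows]


def weighted_mean(values, weights):
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


filled = impute_group_mean(respondents, "age", "score")
weights = poststratification_weights(filled, "age", population_share)
scores = [row["score"] for row in filled]

print(round(sum(scores) / len(scores), 2))       # unweighted mean: 8.2
print(round(weighted_mean(scores, weights), 2))  # weighted mean: 6.6
```

Here, younger customers make up 80% of respondents but, by assumption, only 40% of the population, so weighting pulls the average down from 8.2 to 6.6. Simple mean imputation can understate uncertainty; more rigorous analyses often use methods such as multiple imputation.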

2. Statistical techniques

These refer to a range of mathematical methods, such as regression analysis or propensity score matching, that can be used to adjust, analyze, and interpret survey data. These techniques help to identify and control for bias, enabling you to come to more accurate and reliable conclusions.

3. Use of follow-up surveys

After your initial survey, follow-up surveys can be conducted to determine the reliability and validity of your findings and investigate these further. Follow-up surveys can help mitigate response bias by providing another layer of data and allowing researchers the opportunity to address any discrepancies or unexpected results.

Conclusion

Surveys are a great way to carry out market research, but if responses are skewed by bias, your research results and any subsequent analysis of them may be useless. It’s, therefore, crucial to understand how response bias can surface and how to mitigate it, both when structuring your survey and, later, when analyzing the data you’ve gathered.

Armed with these tools and strategies, you can derive valuable insights from your future research initiatives and make the best decisions for your business!

Sena is a content writer at forms.app. She likes to read and write articles on different topics, learn about different cultures, travel, and study languages. Her specialties are linguistics, surveys, survey questions, and sampling methods.